This AI “black box” aims to make operating rooms as safe as planes (Behind the Scenes, Part 1)

Surgery and aviation have more in common than you think. Let me take you behind the scenes of my first piece for MIT Tech Review.

This week, I wanted to take a break from our regularly scheduled (stigma) programming to take you behind the scenes of my latest journalism article. For MIT Tech Review, I explore the OR black box and how AI is shaking up surgery, surveilling doctors to improve safety. Read the full story here; then come back to get the behind-the-scenes of this story.

Let’s step back to 1977

It was a stormy night in Tenerife. The rain beat down on the Spanish island’s small airport, and a dense fog prowled in from the sea, covering the tarmac in grey.

Inside Pan Am Flight 1736, the passengers were getting restless. They’d boarded in Los Angeles almost 13 hours ago and were now stuck on this tarmac, diverted to Tenerife and unable to leave. So, when the announcement came that Pan Am was cleared to taxi, the aircraft erupted in cheers.

A few minutes earlier, the control tower had instructed another plane, KLM Flight 4805, to similarly get ready for takeoff. There was only one runway at the airport, so KLM rumbled to one end and made a 180-degree turn, the full length of the tarmac now in front. Pan Am was still in its way, inching into a holding space, but with the fog so thick and the runway lights out of service, the control tower couldn’t see either 747, and neither could the pilots.

The KLM captain, however, was eager to leave. The weather conditions were awful, and if visibility got worse, he’d be stuck in Tenerife overnight. So, as the control tower cleared the KLM flight to get ready for takeoff, the captain began barreling forward.

KLM: “Roger, sir, we are cleared to the Papa beacon, Flight Level 90 until intercepting the 325. We're now at takeoff.”

Control Tower: “Okay...”

Two seconds passed, the control tower unsure if KLM was at the takeoff position, or taking off.

Control Tower: “Standby for takeoff. I will call you.”

Pan Am: “We are still taxiing down the runway!”

KLM only heard the initial “Okay,” those final two messages coming simultaneously and melding into static. By the time Pan Am saw KLM’s lights break through the fog, the planes were only 2,000 feet apart. Pan Am desperately throttled its engines, trying to drive off into the grass, while KLM pulled up its nose to lift off, its tail dragging across the runway in a spatter of sparks.

It wasn’t enough. The two planes crashed into each other, KLM’s left wing slicing through Pan Am’s upper deck, tearing it off. The Pan Am first officer instinctively reached up to turn off the engines, but there was no roof over his head, only a smoldering orange. The KLM flight soared for a few more seconds before slamming onto the runway, exploding. Everyone on board was incinerated instantly.

How Tenerife shapes AI today

Stanford professor Teodor Grantcharov is obsessed with Tenerife. He was six years old when the disaster happened on March 27, 1977, and he has been studying it ever since, searching for clues as to what went wrong. Here were incredibly experienced pilots with the best technologies of their time on some of the most iconic airplanes. But 583 people still died.

The Tenerife disaster was a turning point for aviation safety, with sweeping changes made to ensure this accident could never happen again. In 1970, the industry was plagued by 6.5 fatal accidents for every million flights; today, that rate is less than 0.5.

Grantcharov is a surgeon, not a pilot, but he lists off these safety statistics with palpable envy. In medicine, errors remain far too common, with estimates ranging from 22,000 preventable hospital deaths per year to 250,000 (from a relatively controversial estimate). Regardless of the exact number, in surgery, mistakes can range from wrong-site, wrong-procedure, or wrong-patient errors to forgotten gauze, wire cutters, and scalpel blades left inside people’s bodies.

While none of these examples are quite as dramatic as Tenerife, Grantcharov thinks that if you were on the operating table when the surgeon screwed up, the error might as well be a plane crash. He points to remarks from Captain Sully — from the Miracle on the Hudson — that if aviation errors killed as many people as medical ones, “there would be a nationwide ground stop, a presidential commission, congressional hearings… No one would fly until we’d solved the fundamental issues.”

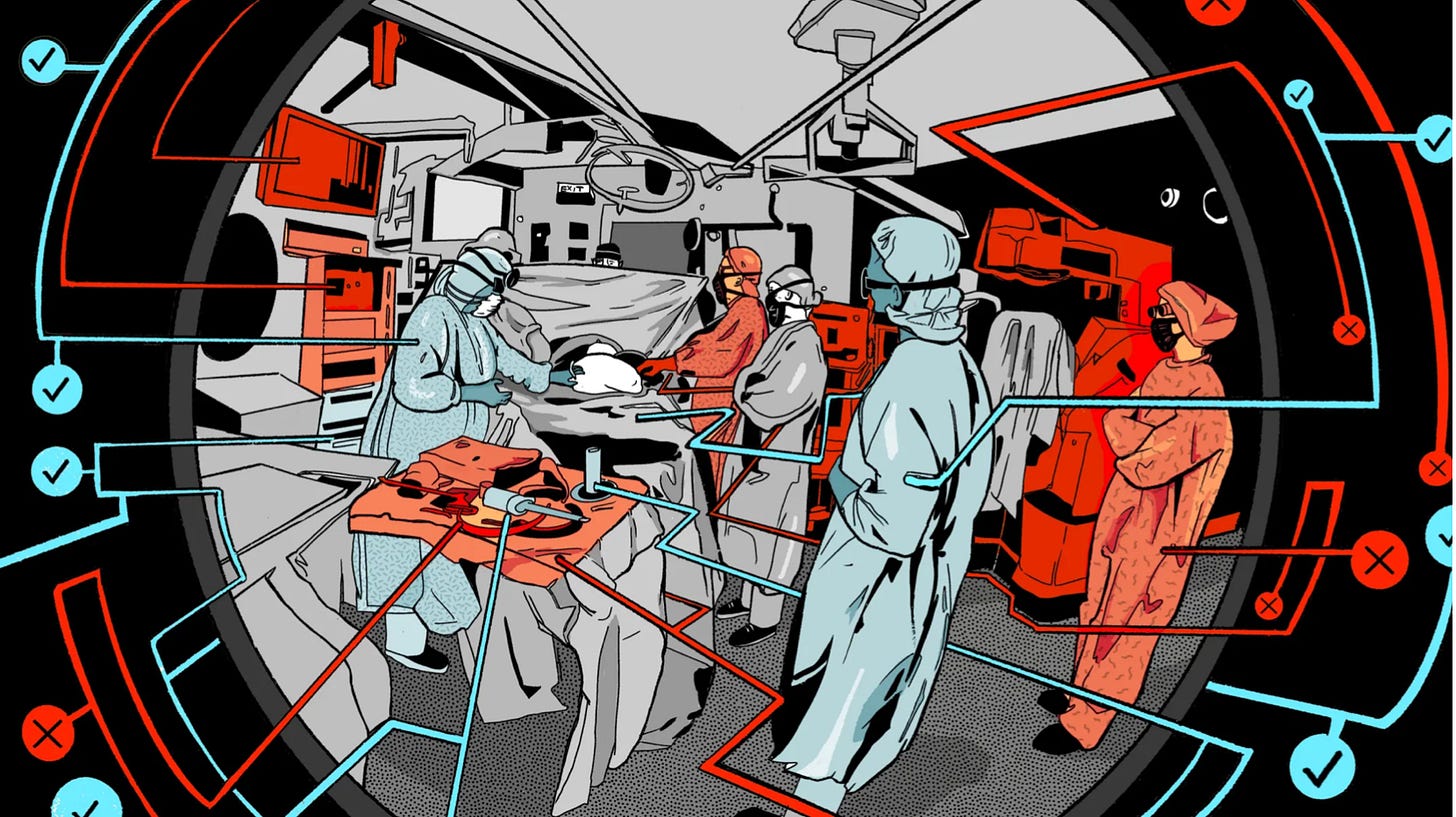

Enter the OR black box, an artificial intelligence platform that records and analyzes data from wall-mounted cameras, ceiling microphones, and anesthesia monitors. In 2014, Grantcharov installed the first prototype at St. Michael’s Hospital; a decade later, his technology has been deployed into nearly 40 institutions globally, including surgical behemoths like the Mayo Clinic and Duke.

But what would it mean to collect all this data? Critics say the U.S. is already a surveillance state of sorts, with more and more of our lives monitored, stored, and monetized. The operating room, however, has long been a bastion of privacy — and some surgeons don’t want video cameras watching over them. In fact, at some hospitals, surgeons have refused to operate when the black boxes are in place, and some of the systems have even been sabotaged.

My piece in MIT Technology Review dives into the tech behind Grantcharov’s black box but also asks a central question around surveillance culture: “Are hospitals on the cusp of a new era of safety—or creating an environment of confusion and paranoia?”

Read the piece for my answer to that question. But in this newsletter, I wanted to talk about two concerns about the OR black box that didn’t make it into the article: 1) the Hawthorne Effect and 2) the company’s deletion policy, where all data are deleted 30 days after an operation.

Hawthorne Effect

The Hawthorne Effect is an idea in psychology that people change their behavior when observed. It gets its name from experiments conducted at Western Electric’s Hawthorne Works in the 1920s and 1930s, where researchers explored whether changing lighting would increase employees’ productivity. When the lighting increased, productivity increased; when the lighting decreased, productivity increased as well. But after the study ended and the scientists left, productivity decreased. It wasn’t the lighting changing things; it was the pesky researcher in the corner taking notes.

Teneille Brown, a law professor at the University of Utah, worries that with a surgeon’s every step being recorded, they might change their practice. For example, perhaps surgeons start ordering more unnecessary tests and procedures, or avoid high-risk patients or particular operations, to cover their tracks and avoid potential liability. A manifestation of the Hawthorne Effect, this phenomenon is commonly known as “defensive medicine.” Not only might healthcare expenditures increase as a result (defensive medicine already costs $55.6 billion), but patients might even be harmed. “Physicians already overestimate the risk of being sued by 11 times,” says Brown. “They're the most risk-averse, freaked-out people.”

Grantcharov recognizes this risk and has acknowledged it in his previous research papers. But he also believes that his platform minimizes the risk since the black box consists only of a handful of microphones and dome cameras. “It’s not like a person with a huge camera walking around the area and taking a picture, or a person with a pen and paper taking notes,” he says.

30-Day Deletion Policy

Grantcharov’s company deletes black box data within 30 days to minimize the risk of recordings being used in malpractice proceedings. In fact, Brown says that it would be nearly impossible for a patient to find representation, go through the required conflict-of-interest checks, and file a discovery request within this time frame.

Still, the idea of regularly deleting data feels somewhat antithetical to the ethos of science and, according to McGill University historian of medicine Thomas Schlich, is somewhat unusual. But Grantcharov justifies it in the name of protecting surgeons and by the very nature of medical errors. “They’re not happening because the surgeon wakes up in the morning and thinks, ‘I’m gonna make some catastrophic event happen,’” says Grantcharov. “This is a system issue.”

While there are indeed many moving parts in surgery, Michelle Mello, a professor of law and health policy at Stanford, thinks that all the talk of system problems understates the role of individual errors. “Over 90% of cases, there is an individual failure. There might also be a team dynamic failure, but there’s not a bunch of blameless individuals who just interacted with equipment in a certain way,” she says. “That’s not really how it goes down.”

A medical malpractice attorney, who wasn’t authorized by his firm to go on the record, agrees with Mello. “This whole notion of systemic errors: that’s a whole lot of bullshit,” he says. “We don't want to blame any one particular individual. But guess what? Accountability can’t just be on the whole hospital.” Systems are made of individuals, and these surgical recordings could help ferret out bad actors.

So then why delete data after 30 days or deny patients access? “There's no legitimate reason because this isn’t opinion. This is fact that's being recorded in real time,” he says. “If you have nothing to hide, you don’t have anything to worry about.”

Read Part 2 of this Behind-the-Scenes series of my MIT Tech Review article, where I will delve into the medico-legal implications of Grantcharov’s black boxes. If the courts get a hold of black box recordings, would surgeons be inundated with lawsuits? Could OR black boxes revolutionize the malpractice system — for the better?